OSGi will be the end of your project

- Introduction: The Happy Dream™ of Modularity

- The Shattering

- How then?

- Threats to validity

- Concluding

- Appendix A. OSGi popularity data

- Appendix B. Measuring cost of software

Introduction: The Happy Dream™ of Modularity

The problem of maintaining software for multiple decades

The Shattering

The trap of versioning interfaces

Adding versions to an interface or a contract also has a very tangible downside: you have to maintain multiple versions of the software, and each version is arguably a partial clone of the others.

A good example is the pubsub implementations available in Apache Celix. The pubsub zmq admins v1 and v2 have the same functionality: v2 just uses a new serialization interface instead of the old one (and refactors a few things). The result is that instead of having to maintain roughly 2400 lines of code, the burden has now doubled to roughly 4800 lines of code.

One addendum here is that this is not necessarily OSGi-specific. Supporting multiple versions of anything leads to this issue:

- Having multiple versions of a docker container running simultaneously

- Having multiple versions of a protobuf/json/$YOUR_SERDE_HERE contract

Added complexity

Made for languages with reflection

Multithreaded hell

One thing that the OSGi spec simply does not address at all (okay, except for the “recent” addition of promises) is multithreading. It is deemed to be an implementation detail.

In practice, what I see in Apache Celix, Apache Felix and Amdatu is that not only are the libraries/frameworks themselves riddled with mutexes and multithreading-related bugs, but user code is as well. As more and more cores become available to developers, and parallel computing is becoming the only way to meet higher performance targets, software will have to be built from the ground up to tackle this complexity.

Exciting new features such as coroutines are being added to Kotlin, C++ and Java (through Project Loom). Likewise, a plethora of other language constructs are being developed, such as channels and compile-time checks that prevent multithreading issues, as is the case in Rust.

OSGi has none of this and relies on Promises to handle async I/O. However, chains of promise callbacks are known to lead to something called Callback Hell, so much so that an entire site has been dedicated to it. It is widely regarded as an anti-pattern by now, and OSGi is only digging itself deeper by including it in the specification.

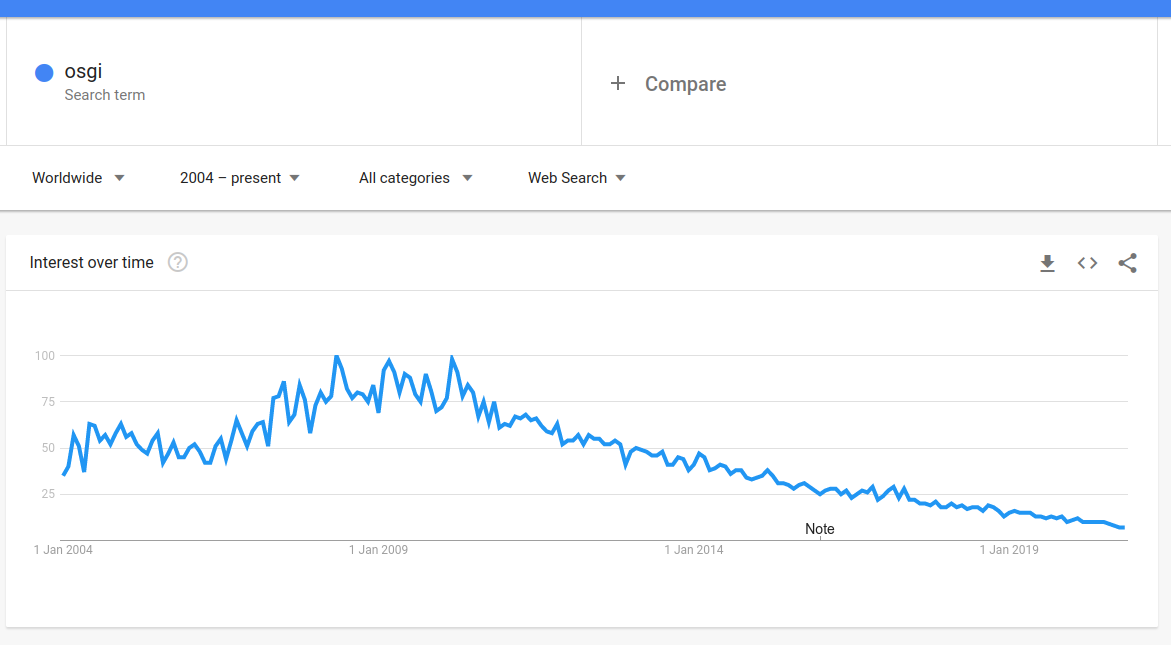
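To make the nesting problem concrete, here is a minimal sketch. It assumes `java.util.concurrent.CompletableFuture` as a stand-in for the OSGi `Promise` type so that it runs without any OSGi dependency, and the three async steps (`fetchUser`, `fetchOrders`, `checksum`) are hypothetical. Because the last step needs the results of both earlier steps, the callbacks end up nested inside each other:

```java
import java.util.concurrent.CompletableFuture;

final class CallbackNestingDemo {
    // Hypothetical async steps. Each completes immediately so the sketch stays runnable.
    static CompletableFuture<String> fetchUser(int id) {
        return CompletableFuture.completedFuture("user-" + id);
    }

    static CompletableFuture<String> fetchOrders(String user) {
        return CompletableFuture.completedFuture(user + "/orders");
    }

    static CompletableFuture<Integer> checksum(String orders) {
        return CompletableFuture.completedFuture(orders.length());
    }

    // The final result needs user, orders AND checksum, so every callback
    // has to live inside the scope of the previous one: one indent per step.
    static CompletableFuture<String> report(int id) {
        return fetchUser(id).thenCompose(user ->
            fetchOrders(user).thenCompose(orders ->
                checksum(orders).thenApply(sum ->
                    user + " -> " + orders + " (" + sum + ")")));
    }

    public static void main(String[] args) {
        // join() blocks until the whole chain has completed.
        System.out.println(report(7).join());
    }
}
```

With three steps this is still readable. Add error handling and a few more dependent steps, and the indentation keeps growing.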

OSGi is dying

As can be seen in Appendix A, the popularity of OSGi reached a peak in 2010 and has been dropping steadily since then. The graph from Google Trends in particular shows that it is only a fraction of what it once was.

Using a not very popular library in your project leads to the following issues:

- New releases are less and less likely to be created, leading to potentially unfixed security issues

- New language features (like Project Loom in Java or, even stronger, Kotlin as a disruptor in the Java field) are unlikely to be adopted by the library

- Support will fade away until you are the biggest expert on the library. This effectively means you now not only maintain your own code, but the library as well. See also the point on more lines of code generally leading to higher cost of development.

How then?

Reducing need for long maintenance periods

The main issue that versioning of interfaces tries to solve is supporting usages of old interfaces for longer. Generally speaking, there are use cases where you want to give customers of your API a grace period for updating to your new version. This is not completely avoidable, and part of the answer is to just accept it and eat the increased cost. But you can still follow some general rules to reduce the cost:

- Reduce the grace period (1 year to upgrade is plenty for almost all cases)

- Where possible, only add optional properties, removing the need for a new major version entirely
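The second rule can be sketched as follows. This is only an illustration under assumptions: the `Reading` contract and its `unit` property are hypothetical names, not taken from any real API. The point is that a property added later is optional, so producers written against the first release keep working and consumers fall back to a default:

```java
import java.util.Optional;

final class OptionalPropertyDemo {
    // Hypothetical contract. 'unit' was added after the first release.
    static final class Reading {
        private final String sensorId;
        private final double value;
        private final String unit; // null when the producer predates this property

        // Original constructor: existing producers keep compiling and working.
        Reading(String sensorId, double value) {
            this(sensorId, value, null);
        }

        // New constructor for producers that know about the optional property.
        Reading(String sensorId, double value, String unit) {
            this.sensorId = sensorId;
            this.value = value;
            this.unit = unit;
        }

        String sensorId() { return sensorId; }
        double value() { return value; }
        Optional<String> unit() { return Optional.ofNullable(unit); }
    }

    // Consumers handle both old and new producers with a single code path.
    static String describe(Reading r) {
        return r.sensorId() + "=" + r.value() + " " + r.unit().orElse("(unit unknown)");
    }

    public static void main(String[] args) {
        System.out.println(describe(new Reading("t1", 21.5)));      // old producer
        System.out.println(describe(new Reading("t1", 21.5, "C"))); // new producer
    }
}
```

There is one contract and one implementation to maintain, instead of a v1 and a v2 living side by side.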

Add frequent updates in contracts

The main issue I see holding back a shorter grace period is contract negotiation. Since our field is so young and unestablished, we often have to deal with contracts that are built on wrong expectations:

- First-time-right based

- Fixed Price

- Big tenders where waterfall still reigns

Try as much as possible to re-negotiate your contracts to become more agile. Involve the customer weekly and sometimes even daily. Release early, release often.

Versioning without duplication

Modularity without OSGi

Thread Confinement

Static Thread Creation

Actor Model

Hybrid Model

kubernetes, API, Dependency Management + Lifecycle management (osgi-lite)

Threats to validity

Valid uses of OSGi

monoliths with low update frequency?

Existing projects made with OSGi

Concluding

Appendix A. OSGi popularity data

Books

Conference presentations

The only place really showing OSGi-related conference talks is the Eclipse Foundation. For example, the talks “Connect OSGi and Spring” and “OSGi Best Practices” were given in January 2020, though their view counts are low. Searching YouTube for “osgi”, filtering on the last year and sorting by view count gives the following:

Doing the same without the filter on the last year gives videos from years ago (skewed, because they have also had longer to acquire views).

But it does show that the most popular video only has 39k views. Compare that to, for example, coroutines in Kotlin, which give a much more popular impression:

How often is OSGi downloaded/used?

OSGi, Equinox and Felix do not appear in the first of the following sites/blog posts; the others give a rough indication of usage:

- https://www.overops.com/blog/the-top-100-java-libraries-of-2019-based-on-30073-source-files/ (see https://docs.google.com/spreadsheets/d/1QXw5TILFQCBoB0wxhNAoKabRbUm9C6TE3mD260S_gXY/edit#gid=0 for the full data)

- https://javalibs.com/artifact/org.osgi/osgi.core shows it being used by a little over 2K artifacts, a number that is dropping slightly.

- https://javalibs.com/artifact/org.apache.felix/org.apache.felix.framework shows a very strong spike and drop-off; I am not sure what to make of this yet.

- https://mvnrepository.com/popular?p=4 OSGi Core appears 38th on the list of popular packages, based on usages. I am not sure if this is a usable data point, given that “usages” is ill-defined and is skewed towards the entire lifetime of the product rather than, say, the past year.

Google Trends

Appendix B. Measuring cost of software

ISO 25010

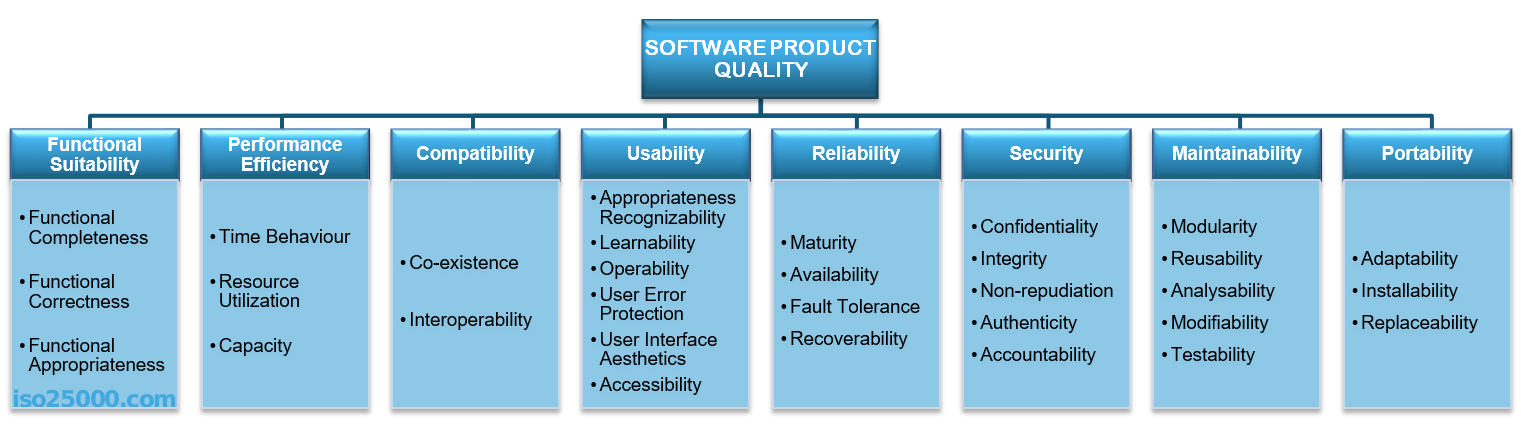

ISO 25010 is a model for assessing software quality along various axes.

The standard goes into a lot of depth, which I am not going to cover here (maybe in the future). Rather, I want to talk a bit about the main usage of ISO 25010: decision making based on measurements.

Estimating software

Estimating software usually boils down to wanting to know how much money it will cost, how long it will take and how many people should be hired. There are various methods:

- COCOMO II (easy-to-use web interface available)

- COSMIC (function points)

- Hybrid estimation: model + humans

- And more
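To give a feel for what such a model looks like, here is a rough sketch of the COCOMO II effort equation, effort = A × size^E × (product of effort multipliers), with E = B + 0.01 × (sum of scale factors). The constants `A = 2.94` and `B = 0.91` and the nominal scale-factor sum of 18.97 are quoted from memory of the COCOMO II.2000 calibration, so treat them as assumptions and check them against the model definition before relying on any number. The sizes reuse the 2400 and 4800 lines from the Celix example earlier:

```java
final class CocomoSketch {
    // Assumed COCOMO II.2000 calibration constants; verify before real use.
    static final double A = 2.94;
    static final double B = 0.91;
    // Assumed sum of the five scale factors when all are rated "nominal".
    static final double NOMINAL_SCALE_FACTOR_SUM = 18.97;

    /**
     * Estimated effort in person-months.
     * @param ksloc size in thousands of source lines of code
     * @param scaleFactorSum sum of the five scale factor ratings
     * @param effortMultiplierProduct product of all effort multipliers (1.0 = all nominal)
     */
    static double personMonths(double ksloc, double scaleFactorSum, double effortMultiplierProduct) {
        double exponent = B + 0.01 * scaleFactorSum;
        return A * Math.pow(ksloc, exponent) * effortMultiplierProduct;
    }

    public static void main(String[] args) {
        double single = personMonths(2.4, NOMINAL_SCALE_FACTOR_SUM, 1.0);
        double doubled = personMonths(4.8, NOMINAL_SCALE_FACTOR_SUM, 1.0);
        System.out.printf("2.4 KSLOC: %.1f person-months%n", single);
        System.out.printf("4.8 KSLOC: %.1f person-months%n", doubled);
        System.out.printf("ratio: %.2f%n", doubled / single);
    }
}
```

Whenever the exponent is larger than 1, as it is under these assumed values, doubling the code size more than doubles the estimated effort.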

The general gist here is that one can establish a list of factors that impact the cost of building software. A small preview of this is:

- Higher cyclomatic complexity -> generally higher cost

- Higher number of defects found by something like Coverity or SonarQube -> generally higher cost

- More inputs/outputs as measured by the COSMIC model -> generally higher cost

- In a very, very broad sense, more lines of code -> generally higher cost

- Caveats apply, exceptions exist